Next generation imaging

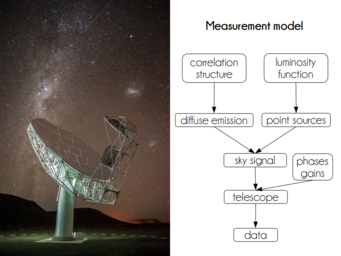

Fig. 1: Probability model of the sky and its observation by a radio telescope. The arrows represent stochastic influences that are ultimately imprinted on the data. When reconstructing the sky signal from the data using NIFTy5, these correlations must be traced backwards in order to deduce causes from the observed effects.

Each day, a large number of astronomical telescopes scan the sky at different wavelengths, from radio to optical to gamma rays. The images generated from these observations are usually the result of a complex series of calculations developed specifically for each telescope. But all these different telescopes observe the same cosmos – possibly just different facets of it. Therefore, it makes sense to standardize the imaging of all these instruments. Not only does this save a lot of work in developing different imaging algorithms, it also makes results from different telescopes easier to compare, allows measurements from different sources to be combined into one common image, and means that advances in software development will directly benefit a larger number of instruments.

The research group on information field theory at the Max Planck Institute for Astrophysics has taken a big step towards achieving this goal of a uniform imaging algorithm by developing and publishing the NIFTy5 software. The research topic of this group, information field theory, is the mathematical theory on which imaging processes are based. Information field theory uses methods from quantum field theory for the optimal reconstruction of images. The latest version, NIFTy5, now automates a large part of the necessary mathematical operations.

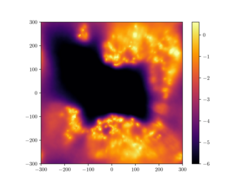

Fig. 2: Galactic dust distribution around the Sun reconstructed with NIFTy5 from data of the Gaia satellite. With the highest spatial resolution reached so far, the figure shows (in logarithmic colour scale) the amount of dust in the galactic plane in a square two thousand light years on a side. The dark region is the local 'super bubble', an area around the Sun cleared of dust by stellar explosions.

To begin with, the user needs to program probability models of the image signal (see Fig. 1) and of the measurement. For this, they can rely on a number of prefabricated building blocks, which often need only to be combined or slightly modified. These modules include models for typical signals, such as point sources or diffuse radiation sources, and for typical measurement situations, which may differ in noise statistics or instrument response. From such a 'forward' model of the measurement, NIFTy5 creates an algorithm that calculates 'backwards' to the original signal, which results in a computed image. However, since the source signal can never be determined uniquely, the algorithm also quantifies the remaining uncertainties. It does so by providing a set of plausible images: the greater the uncertainty in a region, the more these images differ there.
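The idea of a forward model, a backward calculation and a set of plausible images can be illustrated with a toy example in plain NumPy. This is only a minimal sketch and does not use the NIFTy5 interface: it assumes a linear instrument, Gaussian noise and a Gaussian prior, for which the backward step has a closed form (the Wiener filter). The correlation length, the noise level and the choice of an instrument that sees only the left half of a 64-pixel "sky" are arbitrary assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_pix = 64
x = np.arange(n_pix)

# Prior (assumed): zero-mean Gaussian signal with exponentially decaying correlations.
S = np.exp(-np.abs(x[:, None] - x[None, :]) / 8.0)

# Forward model (assumed): the instrument sees only the left half of the pixels,
# and each measurement carries independent Gaussian noise.
observed = np.arange(n_pix // 2)
R = np.eye(n_pix)[observed]            # response matrix, shape (32, 64)
noise_var = 0.01

# Simulate a "true" signal and the data it would produce.
s_true = np.linalg.cholesky(S) @ rng.standard_normal(n_pix)
d = R @ s_true + np.sqrt(noise_var) * rng.standard_normal(observed.size)

# Backward step: posterior covariance D and posterior mean m (Wiener filter).
D = np.linalg.inv(np.linalg.inv(S) + R.T @ R / noise_var)
D = 0.5 * (D + D.T)                    # symmetrise against round-off
m = D @ R.T @ d / noise_var

# A set of plausible images: posterior samples scattered around the mean.
samples = m[:, None] + np.linalg.cholesky(D) @ rng.standard_normal((n_pix, 200))
spread = samples.std(axis=1)

print("mean spread, observed pixels:  ", spread[observed].mean())
print("mean spread, unobserved pixels:", spread[n_pix // 2:].mean())
```

In this toy setting the samples agree closely where data exist and fan out in the unobserved half, which is the behaviour described above: the spread between plausible images encodes the remaining uncertainty.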

NIFTy5 has already been used for a number of imaging problems, the results of which are being published simultaneously. These include the three-dimensional reconstruction of galactic dust clouds in the vicinity of the solar system (see Fig. 2; an animation can be found here), as well as a method to determine the dynamics of fields based only on their observation (see Fig. 3).

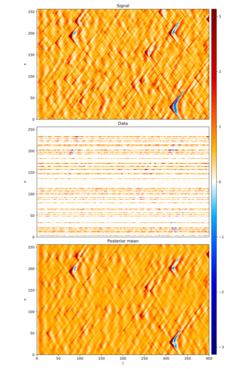

Fig. 3: Reconstruction of dynamic fields and their unknown dynamic laws using NIFTy5.

Top: Space-time diagram of a field in which waves propagate after random excitations. Time runs from left to right and space is plotted vertically. The excitations are visible as triangular structures, where the excitation is located at the top of the triangle and the wave propagates from there.

Centre: Measured values for the field in the top panel at a few discrete locations.

Bottom: Reconstruction of the field from these measured data alone, without prior knowledge of its dynamics; the dynamics were likewise reconstructed from the data. All main structures are recovered, although details may differ because of noise in the data.

Drawing on past experience, NIFTy5 not only makes it much more convenient to build new, complex imaging methods; the software package also includes a number of algorithmic innovations. For example, the "Metric Gaussian Variational Inference" (MGVI) algorithm was developed specifically for NIFTy5, but can also be used for other machine learning methods. In contrast to conventional methods of probability theory, the implementation of this algorithm in NIFTy5 does not require the explicit storage of so-called covariance matrices. As a result, the memory requirement grows only linearly rather than quadratically with problem size, so that even gigapixel images can be computed without difficulty.
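Why avoiding explicit covariance matrices keeps memory linear can be sketched with a small matrix-free example. This is not MGVI and not the NIFTy5 interface; it is a generic illustration under assumed ingredients: a prior covariance that is diagonal in Fourier space (an assumed power spectrum), an instrument that observes a random half of the pixels, and a hand-written conjugate gradient solver. The inverse posterior covariance is never stored as a matrix; it exists only as a function that acts on a vector.

```python
import numpy as np

def make_inverse_covariance(power, mask, noise_var, n):
    """Return a function v -> D^{-1} v without ever forming D^{-1} as a matrix."""
    def apply(v):
        # S^{-1} v, with the prior covariance S diagonal in Fourier space
        prior_term = np.fft.irfft(np.fft.rfft(v) / power, n=n)
        # R^T N^{-1} R v for a masking response and white noise
        data_term = mask * v / noise_var
        return prior_term + data_term
    return apply

def conjugate_gradient(apply_A, b, tol=1e-8, max_iter=1000):
    """Solve A x = b for symmetric positive definite A given only v -> A v."""
    x = np.zeros_like(b)
    r = b - apply_A(x)
    p = r.copy()
    rs = r @ r
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * b_norm:
            break
        Ap = apply_A(p)
        alpha = rs / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

n = 2**16                                    # a dense n x n float64 matrix would need ~34 GB
rng = np.random.default_rng(1)
freq = np.fft.rfftfreq(n)
power = 1.0 / (1.0 + (freq / 0.05) ** 2)     # assumed prior power spectrum
mask = (rng.random(n) < 0.5).astype(float)   # assumed: half of the pixels are observed
noise_var = 0.01
d = mask * rng.standard_normal(n)            # stand-in data, zero where unobserved

j = mask * d / noise_var                     # information source R^T N^{-1} d
apply_A = make_inverse_covariance(power, mask, noise_var, n)
m = conjugate_gradient(apply_A, j)           # posterior mean; only length-n vectors are held
```

Every object in this sketch is a vector of length n (or shorter), so memory grows linearly with the number of pixels, whereas storing the covariance itself would require n² entries.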