Manipulative communication in humans and machines

A universal sign of higher intelligence is communication. However, not all communication is well-intentioned. How can an intelligent system recognise the truthfulness of information and defend against attempts to deceive? How can an egoistic intelligence subvert such defences? What phenomena arise in the interplay of deception and defence? To answer such questions, researchers at the Max Planck Institute for Astrophysics in Garching, the University of Sydney and the Leibniz-Institut für Wissensmedien in Tübingen have studied the social interaction of artificial intelligences and observed very human behaviour.

When our brain receives a message, it can include it in its store of knowledge or ignore it. The latter is appropriate, for example, if there are strong doubts about the truthfulness of the message. In this case, the message may say more about its sender than the sender would like. As a consequence of untrustworthy statements, others may judge the sender to be generally untrustworthy and be more sceptical of him in the future. This would reduce the effectiveness of his communication. Therefore, a message sender should be interested in having a good reputation for credibility.

While honesty can indeed build a good reputation, this is not the only way. Cleverly constructed lies that cannot be easily debunked can also be very successful in this regard. They offer the broadcaster the opportunity to disseminate views that are particularly advantageous to him. Because of such advantages, lies and deception have probably been with mankind since it began to speak.

The use of reputation, as well as its manipulation, should by no means be limited to humans. Virtually all intelligence, be it human, animal, artificial, or even extra-terrestrial, should be confronted with the dilemma of untruthful, misinforming, or even malicious communication, since there are always advantages to deception. Therefore, it is to be expected that in any society of intelligent beings, the concept of reputation will arise, as well as communication about the reputation of the individual members of the society. Furthermore, individual members will always seek to manipulate their reputation to their own advantage. At least this seems to be the case with us humans.

Reputation and communication about reputation should therefore be universal phenomena in any intelligent society. Understanding their dynamics is important and urgent, not least with regard to social media, where more people communicate, and with greater reach, than ever before. Problematic developments such as polarisation and segregation of groups seem to be fuelled by the dynamics of social media.

To investigate such dynamics, Torsten Enßlin and Viktoria Kainz from the Max Planck Institute for Astrophysics, Céline Bœhm from the University of Sydney and Sonja Utz from the Leibniz-Institut für Wissensmedien have created a computer simulation of interacting artificial intelligences, the Reputation Game Simulation. The simulated intelligences, also called "agents", talk to each other, but not necessarily always in an honest way (see Figure 1).

The agents try to find out how honest the other agents are, but also how honest they themselves are. At the same time, the agents strive to convince the others of their own credibility. Flattering lies about themselves or about supportive friends help with this, as do disparaging lies about enemies. However, lying always carries the risk of being caught. In addition to tell-tale signs of lying that cannot always be avoided, agents also pay attention to how plausible a claim is in the light of their own knowledge and use this to assess the credibility of the messages received. Depending on the outcome of this assessment, a message is either judged likely to be true and integrated into the agent's own knowledge, or judged potentially misleading and ignored. In the latter case, the agent receiving the message registers it as an attempt by the sender to deceive. To keep the complexity of the simulation manageable, the scientists allow the agents only one topic of conversation, namely the honesty of the different agents. So, they gossip with each other and about each other.
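The accept-or-ignore decision described above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the researchers' implementation: the class `Agent`, the `tolerance` parameter, the plausibility threshold, and the update rule are all hypothetical choices made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical agent that gossips only about honesty (values in [0, 1])."""
    name: str
    # What this agent currently believes about the honesty of others.
    believed_honesty: dict = field(default_factory=dict)
    # How many suspected deception attempts were registered per sender.
    suspected_lies: dict = field(default_factory=dict)

    def receive(self, sender, subject, claimed_honesty, tolerance=0.3):
        """Accept or ignore the claim 'subject is this honest'.

        Returns True if the message was accepted. The threshold and the
        update rule are assumptions made for illustration only.
        """
        own_view = self.believed_honesty.get(subject, 0.5)
        trust = self.believed_honesty.get(sender, 0.5)
        surprise = abs(claimed_honesty - own_view)

        # A claim is plausible if it is close to the receiver's own view;
        # trusted senders are given more leeway.
        if surprise <= tolerance * (0.5 + trust):
            # Move the own view towards the claim, weighted by trust.
            shift = 0.5 * trust * (claimed_honesty - own_view)
            self.believed_honesty[subject] = own_view + shift
            return True

        # Implausible claim: ignore it and remember the suspected lie.
        self.suspected_lies[sender] = self.suspected_lies.get(sender, 0) + 1
        return False


cyan = Agent("cyan", believed_honesty={"black": 0.8, "red": 0.2})
accepted = cyan.receive("black", "red", 0.3)   # close to Cyan's own view
rejected = cyan.receive("red", "red", 0.95)    # implausible self-praise
```

In this toy version a truthful but surprising claim is rejected just like a lie, because the receiver can only compare a message with what it already believes.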

Such simulations of gossip are nothing new in the social sciences. What is new in the Reputation Game Simulation, however, is the more advanced cognitive system of the agents, which follows information-theoretical principles. The agents have opinions that can be either very firm or quite uncertain. They draw logical conclusions, taking into account the uncertainty of the information they receive. However, like us humans, the agents are also limited in the amount and accuracy of the information they can process. The agents observe themselves and their environment, and adapt to the prevailing social moods. This allows them, on the one hand, to better recognise typical lies and, on the other hand, to design their own lies in such a way that they spread more successfully.
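One common way to represent an opinion that can be firm or uncertain is a Beta distribution over the honesty of another agent. The following Python sketch assumes this representation purely for illustration; the article does not specify the simulation's internals, and the class `HonestyBelief` and its `weight` parameter are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HonestyBelief:
    """Belief about someone's honesty as a Beta(honest, dishonest) distribution."""
    honest: float = 1.0      # pseudo-count of statements judged honest
    dishonest: float = 1.0   # pseudo-count of statements judged dishonest

    def update(self, judged_honest: bool, weight: float = 1.0) -> None:
        """Add one observation; weight < 1 models unreliable evidence."""
        if judged_honest:
            self.honest += weight
        else:
            self.dishonest += weight

    @property
    def mean(self) -> float:
        """Expected honesty under the current belief."""
        return self.honest / (self.honest + self.dishonest)

    @property
    def std(self) -> float:
        """Standard deviation of the Beta distribution (the uncertainty)."""
        a, b = self.honest, self.dishonest
        n = a + b
        return (a * b / (n * n * (n + 1.0))) ** 0.5


belief = HonestyBelief()          # flat prior: no opinion yet
initial_std = belief.std
for judged_honest in [True] * 8 + [False] * 2:
    belief.update(judged_honest)
```

With the flat starting point the belief is maximally uncertain; each judged statement narrows it, so the opinion becomes firmer as evidence accumulates.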

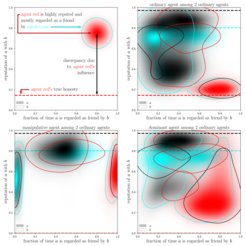

Reputation and friendship statistics of agents in Reputation Game simulations. The relationships of three agents are shown. Agent Black is very honest, Agent Cyan is quite honest, and Agent Red is a chronic liar. The diagram at the top left illustrates how to read the other diagrams. In it, the red area with a cyan border shows how Agent Red was seen by Agent Cyan during one hundred simulations. Agent Cyan would see Agent Red as an honest friend according to this diagram. Agent Red's real honesty is lower and indicated by the red dashed line. The upper right diagram shows the mutual assessment of the three agents when they are all ordinary, i.e. not using any special strategy to raise their reputation, but just lying from time to time. The Cassandra syndrome can be observed here: the actually honest Agent Black is perceived as quite dishonest in many simulations. In the lower diagrams, Agent Red always lies and pursues special strategies. The bottom left diagram shows how efficiently the strategy of flattery allows him to appear as an honest friend of the others. At the bottom right, Agent Red engages in persistent self-praise and thus often, but not always, achieves a very high reputation.

Using the Reputation Game Simulation, the researchers tested a number of different communication strategies to see how well they were able to help agents achieve a high reputation. They found that while honesty can be a good strategy, less honest approaches may be more successful (see Figure 2).

The strategy of flattery turned out to be reliably successful in the simulations (see Figure 2, bottom left). This works particularly well when mainly less honest figures are flattered. These will then, in their more frequent lies, give their good buddy, the flatterer, a very good reputation.

Risky, but in many cases extremely successful, is persistent self-praise, preferably directed at particularly credible and thus highly respected contemporaries (see Figure 2, bottom right). These use their high credibility to convince the other agents of the sincerity of the self-praising agent. In a considerable number of the simulation runs, notorious liars managed in this way not only to appear extremely honest, but also to become convinced of their own honesty. The latter is an astonishing self-deception, for the liars register each of their own lies very well. But the strong praise from the crowd of their convinced followers gives them a firm belief in their own goodness.

In the Reputation Game simulations, therefore, a series of phenomena can be observed that are also found in very similar form in sociology and psychology. These include echo chambers, self-deception, camp formation, toxic as well as frozen social moods, the symbiosis of rogues, and the so-called Cassandra syndrome. The latter refers to the effect that the most truthful person may be the least believed because their opinion differs too much from the group's belief and is therefore perceived as a lie (see Figure 2, top right).

Admittedly, research on lying machines seems far removed from astrophysics, which is still the research focus for Torsten Enßlin and Céline Bœhm. But even in their astrophysical data analyses, these researchers use automated procedures to identify implausible measuring instruments and misleading data sets. It is to be expected that as the complexity of instruments and databases grows, their machine-calculated reputation will play an increasingly important role. Automated communication about such reputation would then be machine gossip.

Torsten Enßlin is an astrophysicist and information theorist. Viktoria Kainz is a theoretical physicist and consultant for artificial intelligence in industrial applications. Céline Bœhm is an astroparticle physicist and cosmologist. Sonja Utz is a psychologist and media sociologist.